DeepSeek-V3 vs Claude 3.5 Sonnet: Which AI Model Actually Delivers?

If you're choosing between DeepSeek-V3 and Claude 3.5 Sonnet for your next AI project, here's the deal: one is open-source and cost-effective, the other is more powerful and polished — but at a steep cost.

Let’s break it down 👇

🆚 Model Overview

DeepSeek-V3 is an open-source Mixture-of-Experts (MoE) model with 671B total parameters (37B active per token). It’s trained on 14.8 trillion tokens and optimized for fast, efficient inference — with a 128K context window, and is available via APIs from DeepSeek, RedPill,HuggingFace.

Claude 3.5 Sonnet, from Anthropic, is proprietary and built for general-purpose reasoning and tool use. It supports a 200K token context, performs strongly in code generation, and is available via APIs from Anthropic, RedPill, Amazon, and Google.

✅ Claude has better performance.

✅ DeepSeek is open-source and cost-effective.

💸 Pricing Breakdown

| DeepSeek-V3 | Claude 3.5 Sonnet | |

| Input Cost | $0.14 / 1M tokens | $3.00 / 1M tokens |

| Output Cost | $0.28 / 1M tokens | $15.00 / 1M tokens |

Claude is ~43x more expensive than DeepSeek on both input and output tokens. That’s a major consideration if you’re deploying at scale.

📊 Benchmark Comparison

Compare performance metrics between DeepSeek-V3 and Claude 3.5 Sonnet (new). See how each model performs on key benchmarks measuring reasoning, knowledge and capabilities.

| Benchmark | DeepSeek-V3 | Claude 3.5 Sonnet | |

| MMLU | Massive Multitask Language Understanding - Tests knowledge across 57 subjects including mathematics, history, law, and more | 88.5% EM Source | 89.3% 0-shot CoT Source |

| MMLU-Pro | A more robust MMLU benchmark with harder, reasoning-focused questions, a larger choice set, and reduced prompt sensitivity | 75.9% EM Source | 78% 0-shot CoT Source |

| MMMU | Massive Multitask Multimodal Understanding - Tests understanding across text, images, audio, and video | Not available | 71.4% 0-shot CoT Source |

| HellaSwag | A challenging sentence completion benchmark | 88.9% 10-shot Source | Not available |

| HumanEval | Evaluates code generation and problem-solving capabilities | 82.6% pass@1 Source | 93.7% 0-shot Source |

| MATH | Tests mathematical problem-solving abilities across various difficulty levels | 61.6% 4-shot Source | 78.3% 0-shot CoT Source |

| GPQA | Graduate-level Physics Questions Assessment - Tests advanced physics knowledge with Diamond Science level questions | 59.1% pass@1 Source | Not available |

| IFEval | Tests model's ability to accurately follow explicit formatting instructions, generate appropriate outputs, and maintain consistent instruction adherence across different tasks | 86.1% Prompt Strict Source | Not available |

➡️ Claude 3.5 Sonnet consistently outperforms in reasoning, math, and especially coding tasks.

➡️ DeepSeek-V3 excels in affordability, open access, and a few edge-formatting use cases.

🤔 So, Which One Should You Choose?

Choose Claude 3.5 Sonnet if:

- You need top-tier performance in complex reasoning or code generation.

- You're building production-grade tools like agents, tutors, or assistants.

- You're okay with paying for premium performance and longer context.

Choose DeepSeek-V3 if:

- You're building cost-sensitive apps and care about open-source flexibility.

- You want solid performance at a fraction of the price.

- You’re experimenting with custom deployments or fine-tuning.

❓ Quick FAQ: Claude 3.5 Sonnet vs DeepSeek-V3

Q: Which model performs better overall?

A: Claude 3.5 wins on most benchmarks — especially for reasoning, coding, and tool use.

Q: Is DeepSeek open-source?

A: Yes. DeepSeek-V3 is fully open and available on HuggingFace for free use and fine-tuning.

Q: How does pricing compare?

A: Claude is significantly more expensive — around 43x more per token than DeepSeek.

Q: Can I use Claude via cloud providers?

A: Yes. Claude 3.5 is accessible via Anthropic’s API, and also through RedPill, Amazon Bedrock and Google Cloud Vertex.

Q: Which one is better for cost-sensitive projects?

A: DeepSeek is the clear winner on affordability and still holds up for many general-purpose tasks.

Q: What’s the context window for each model?

A: Claude supports 200K tokens, while DeepSeek supports 128K — both are great for long prompts.

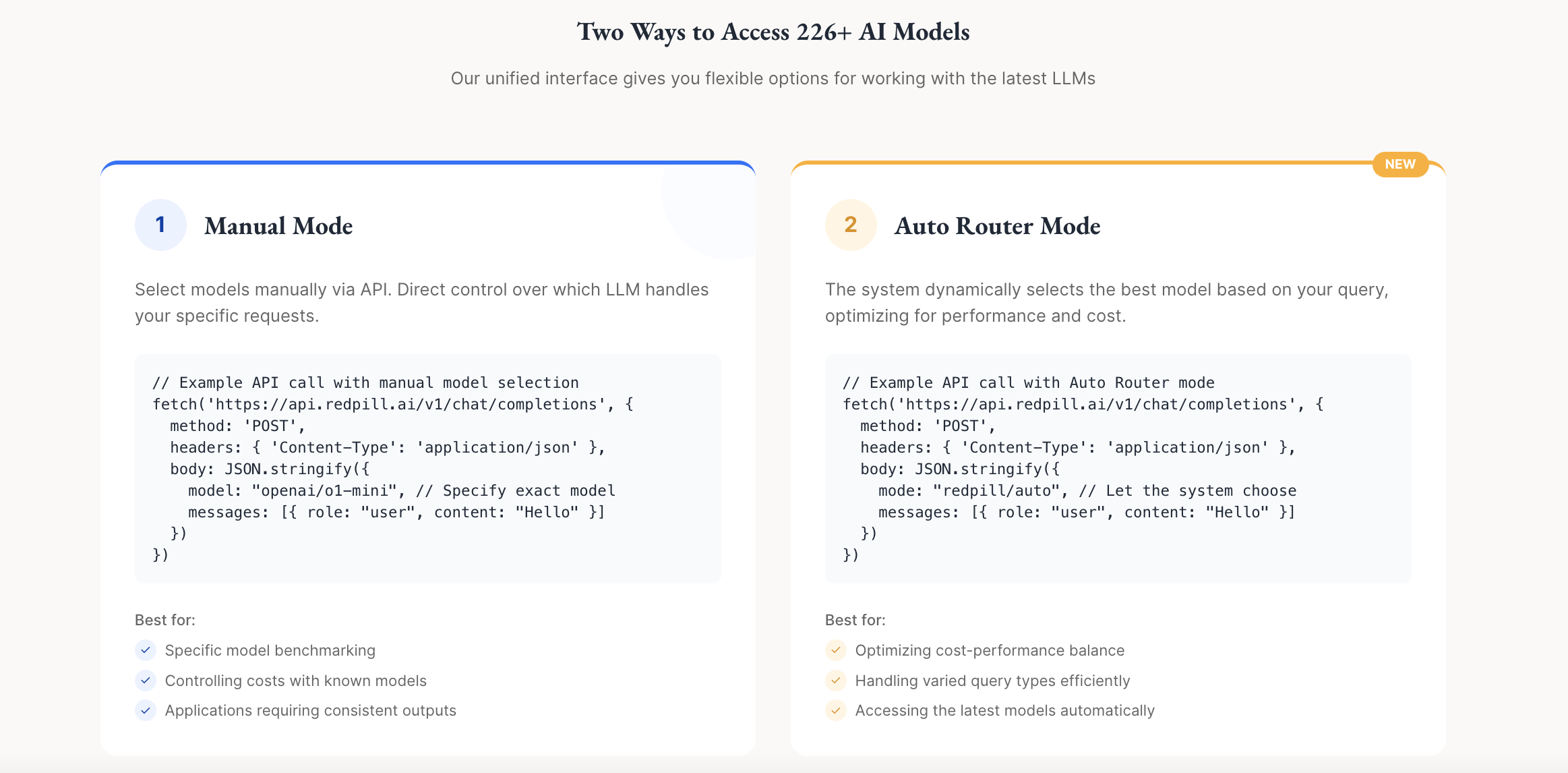

🔀 Want to Use Both Without Switching APIs?

RedPill is a smart AI router that lets you access Claude, DeepSeek, GPT-4o, Mixtral, Gemini, and 200+ LLMs — all through one unified API.

With Auto Router, RedPill can:

- Automatically pick the best model for your prompt

- Optimize for speed, cost, or performance

- Provide cryptographic verifiability

Don't pick sides — route smarter.